[September 2020] New Microsoft DP-200 Brain dumps and online practice tests are shared from Leads4Pass (latest Updated)

The latest Microsoft DP-200 dumps from leads4pass help you pass the DP-200 exam on the first attempt. The leads4pass DP-200 VCE and PDF dumps are newly updated, with exam questions refreshed and answers corrected. Get the latest leads4pass DP-200 dumps in VCE and PDF format: https://www.leads4pass.com/dp-200.html (Q&As: 207 dumps)

[Free DP-200 PDF] Microsoft DP-200 Dumps PDF can be collected on Google Drive shared by leads4pass:

https://drive.google.com/file/d/1b-hvJSM68TxBQmB_fv8lvGJusCiCZdrX/

Microsoft DP-200 Online Exam Practice Questions

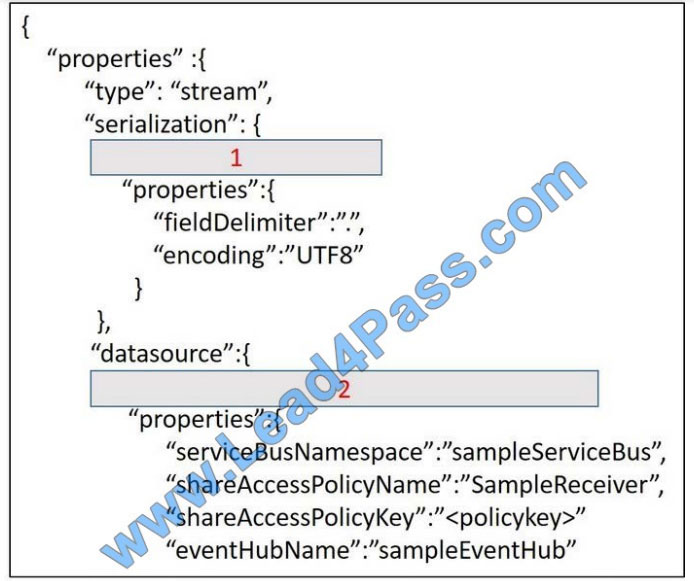

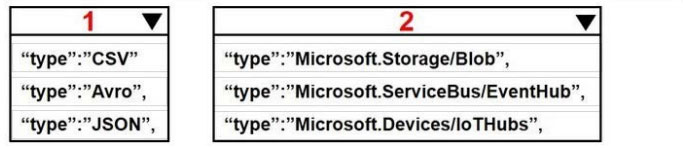

QUESTION 1

A company plans to analyze a continuous flow of data from a social media platform by using Microsoft Azure Stream Analytics. The incoming data is formatted as one record per row.

You need to create the input stream.

How should you complete the REST API segment? To answer, select the appropriate configuration in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

Correct Answer:

QUESTION 2

You have an Azure SQL database named DB1 that contains a table named Table1. Table1 has a field named Customer_ID that is varchar(22).

You need to implement masking for the Customer_ID field to meet the following requirements:

The first two prefix characters must be exposed.

The last four characters must be exposed.

All other characters must be masked.

Solution: You implement data masking and use an email function mask.

Does this meet the goal?

A. Yes

B. No

Correct Answer: B

The goal requires Custom Text data masking, which exposes the first and last characters and adds a custom padding string in the middle.

References: https://docs.microsoft.com/en-us/azure/sql-database/sql-database-dynamic-data-masking-get-started
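The Custom Text mask described above corresponds to the T-SQL `partial(prefix, padding, suffix)` masking function. As a rough illustration only, the following Python sketch emulates what such a mask would expose for a Customer_ID value. The function name `partial_mask`, the sample value, and the handling of values that are too short are assumptions made for this example, not Azure SQL Database behavior.

```python
def partial_mask(value: str, prefix: int, padding: str, suffix: int) -> str:
    """Expose the first `prefix` and last `suffix` characters of `value`.

    Everything in between is replaced by the fixed `padding` string.
    """
    if len(value) <= prefix + suffix:
        # Assumption for this sketch: if nothing would be left to hide,
        # return only the padding so no part of the value leaks.
        return padding
    return value[:prefix] + padding + value[len(value) - suffix:]


# Hypothetical Customer_ID value: expose the first two and last four characters.
masked = partial_mask("AB1234567890WXYZ", prefix=2, padding="XXXX", suffix=4)
print(masked)
```

An email mask, by contrast, exposes only the first character and a fixed email-style suffix, which is why it cannot satisfy the requirements in this question.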

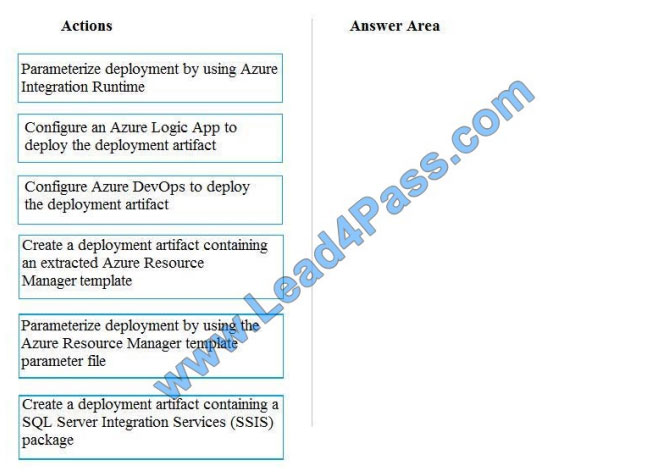

QUESTION 3

You need to ensure that phone-based polling data can be analyzed in the PollingData database.

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Select and Place:

Correct Answer:

Explanation/Reference:

All deployments must be performed by using Azure DevOps. Deployments must use templates used in multiple environments. No credentials or secrets should be used during deployments.

QUESTION 4

Contoso, Ltd. plans to configure existing applications to use the Azure SQL Database.

When security-related operations occur, the security team must be informed.

You need to configure Azure Monitor while minimizing administrative effort.

Which three actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

A. Create a new action group to email [email protected].

B. Use [email protected] as an alert email address.

C. Use all security operations as a condition.

D. Use all Azure SQL Database servers as a resource.

E. Query audit log entries as a condition.

Correct Answer: ACD

References: https://docs.microsoft.com/en-us/azure/azure-monitor/platform/alerts-action-rules

QUESTION 5

You manage a solution that uses Azure HDInsight clusters.

You need to implement a solution to monitor cluster performance and status.

Which technology should you use?

A. Azure HDInsight .NET SDK

B. Azure HDInsight REST API

C. Ambari REST API

D. Azure Log Analytics

E. Ambari Web UI

Correct Answer: E

Ambari is the recommended tool for monitoring utilization across the whole cluster. The Ambari dashboard shows easily glanceable widgets that display metrics such as CPU, network, YARN memory, and HDFS disk usage. The specific metrics shown depend on the cluster type. The “Hosts” tab shows metrics for individual nodes so you can ensure the load on your cluster is evenly distributed.

The Apache Ambari project is aimed at making Hadoop management simpler by developing software for provisioning, managing, and monitoring Apache Hadoop clusters. Ambari provides an intuitive, easy-to-use Hadoop management web UI backed by its RESTful APIs.

References: https://azure.microsoft.com/en-us/blog/monitoring-on-hdinsight-part-1-an-overview/

https://ambari.apache.org/

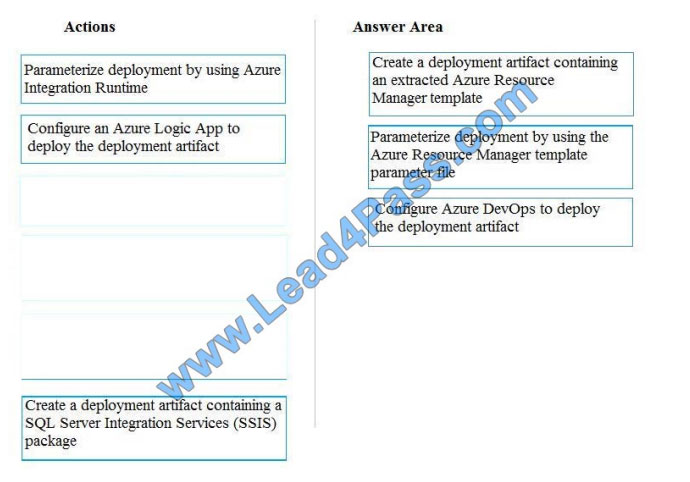

QUESTION 6

You manage the Microsoft Azure Databricks environment for a company. You must be able to access a private Azure Blob Storage account. Data must be available to all Azure Databricks workspaces. You need to provide data access.

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Select and Place:

Step 1: Create a secret scope

Step 2: Add secrets to the scope

Note: dbutils.secrets.get(scope = "", key = "") gets the key that has been stored as a secret in a secret scope.

Step 3: Mount the Azure Blob Storage container

You can mount a Blob Storage container or a folder inside a container through Databricks File System – DBFS. The mount is a pointer to a Blob Storage container, so the data is never synced locally.

Note: To mount a Blob Storage container or a folder inside a container, use the following command:

Python:

dbutils.fs.mount(
source = "wasbs://@.blob.core.windows.net",
mount_point = "/mnt/",
extra_configs = {"": dbutils.secrets.get(scope = "", key = "")})

where:

dbutils.secrets.get(scope = "", key = "") gets the key that has been stored as a secret in a secret scope.

References:

https://docs.databricks.com/spark/latest/data-sources/azure/azure-storage.html
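As a hedged sketch of how the three steps fit together, the helper below builds the arguments that a mount call of this shape expects. The container, storage account, mount, scope, and key names are all hypothetical placeholders, and `build_mount_args` is a helper invented for this example. The `dbutils` object exists only inside a Databricks notebook, so the actual mount call is shown as a comment.

```python
def build_mount_args(container: str, account: str, mount_name: str):
    """Return (source, mount_point, conf_key) for a Blob Storage mount.

    All three inputs are placeholder names chosen by the caller.
    """
    # WASBS URI of the form wasbs://<container>@<account>.blob.core.windows.net
    source = f"wasbs://{container}@{account}.blob.core.windows.net"
    # DBFS path under which the container becomes visible
    mount_point = f"/mnt/{mount_name}"
    # Spark configuration key that carries the storage account access key
    conf_key = f"fs.azure.account.key.{account}.blob.core.windows.net"
    return source, mount_point, conf_key


# Hypothetical names used only for illustration.
source, mount_point, conf_key = build_mount_args(
    "examplecontainer", "examplestorage", "exampledata"
)
print(source)
print(mount_point)
print(conf_key)

# Inside a Databricks notebook, after creating a secret scope and adding the
# storage key to it (Steps 1 and 2), the mount (Step 3) would look like:
#
# dbutils.fs.mount(
#     source=source,
#     mount_point=mount_point,
#     extra_configs={
#         conf_key: dbutils.secrets.get(scope="example-scope", key="example-key")
#     },
# )
```

Keeping the access key in a secret scope means the key never appears in notebook source, which is the reason the secret scope steps come before the mount.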

QUESTION 7

What should you implement to optimize SQL Database for Race Central to meet the technical requirements?

A. the sp_updatestats stored procedure

B. automatic tuning

C. Query Store

D. the DBCC CHECKDB command

Correct Answer: A

Scenario: The query performance of Race Central must be stable, and the administrative time it takes to perform optimizations must be minimized.

sp_updatestats runs UPDATE STATISTICS against all user-defined and internal tables in the current database, which refreshes the query optimization statistics. By default, the query optimizer already updates statistics as necessary to improve the query plan; in some cases, you can improve query performance by using UPDATE STATISTICS or the stored procedure sp_updatestats to update statistics more frequently than the default updates.

Incorrect Answers:

D: DBCC CHECKDB checks the logical and physical integrity of all the objects in the specified database.

QUESTION 8

You plan to implement an Azure Cosmos DB database that will write 100,000 JSON documents every 24 hours. The database will be replicated in three regions. Only one region will be writable.

You need to select a consistency level for the database to meet the following requirements:

Guarantee monotonic reads and writes within a session.

Provide the fastest throughput.

Provide the lowest latency.

Which consistency level should you select?

A. Strong

B. Bounded Staleness

C. Eventual

D. Session

E. Consistent Prefix

Correct Answer: D

Session: Within a single client session, reads are guaranteed to honor the consistent-prefix (assuming a single “writer” session), monotonic reads, monotonic writes, read-your-writes, and write-follows-reads guarantees. Clients outside of the session performing writes will see eventual consistency.

References: https://docs.microsoft.com/en-us/azure/cosmos-db/consistency-levels

QUESTION 9

You have an Azure SQL database that has masked columns.

You need to identify when a user attempts to infer data from the masked columns.

What should you use?

A. Azure Advanced Threat Protection (ATP)

B. custom masking rules

C. Transparent Data Encryption (TDE)

D. auditing

Correct Answer: D

Dynamic Data Masking is designed to simplify application development by limiting data exposure in a set of pre-defined queries used by the application. While Dynamic Data Masking can also be useful to prevent accidental exposure of sensitive data when accessing a production database directly, it is important to note that unprivileged users with ad-hoc query permissions can apply techniques to gain access to the actual data. If there is a need to grant such ad-hoc access, Auditing should be used to monitor all database activity and mitigate this scenario.

References: https://docs.microsoft.com/en-us/sql/relational-databases/security/dynamic-data-masking

QUESTION 10

Which two metrics should you use to identify the appropriate RU/s for the telemetry data? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

A. Number of requests

B. Number of requests exceeded capacity

C. End to end observed read latency at the 99th percentile

D. Session consistency

E. Data + Index storage consumed

F. Avg Throughput/s

Correct Answer: AE

Scenario: The telemetry data must be monitored for performance issues. You must adjust the Cosmos DB Request Units per second (RU/s) to maintain a performance SLA while minimizing the cost of the RU/s.

With Azure Cosmos DB, you pay for the throughput you provision and the storage you consume on an hourly basis.

While you estimate the number of RUs per second to provision, consider the following factors:

Item size: As the size of an item increases, the number of RUs consumed to read or write the item also increases.
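To make the estimation concrete, here is a minimal arithmetic sketch, assuming the required throughput is roughly the request rate multiplied by the per-request RU cost. The function name, the request rates, and the per-operation RU costs are hypothetical inputs chosen for illustration; in practice the per-operation cost would be measured from the request charge of real operations, since it grows with item size.

```python
def estimate_rus_per_second(
    reads_per_s: float,
    writes_per_s: float,
    ru_per_read: float,
    ru_per_write: float,
) -> float:
    """Rough RU/s estimate: operations per second times RU cost per operation."""
    return reads_per_s * ru_per_read + writes_per_s * ru_per_write


# Hypothetical workload: 500 reads/s and 100 writes/s of small telemetry items.
# The per-operation costs below are assumed values, not measured ones.
small_items = estimate_rus_per_second(500, 100, ru_per_read=1.0, ru_per_write=5.0)

# Same request rate, but larger items that are assumed to cost twice as much.
large_items = estimate_rus_per_second(500, 100, ru_per_read=2.0, ru_per_write=10.0)

print(small_items, large_items)
```

The comparison shows why both the number of requests and the stored item size feed into the provisioning decision: doubling the per-item cost doubles the RU/s needed for the same request rate.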

QUESTION 11

A company has a SaaS solution that uses Azure SQL Database with elastic pools. The solution contains a dedicated database for each customer organization. Customer organizations have peak usage at different periods during the year.

You need to implement the Azure SQL Database elastic pool to minimize cost.

Which option or options should you configure?

A. Number of transactions only

B. eDTUs per database only

C. Number of databases only

D. CPU usage only

E. eDTUs and max data size

Correct Answer: E

The best size for a pool depends on the aggregate resources needed for all databases in the pool. This involves determining the following:

Maximum resources utilized by all databases in the pool (either maximum DTUs or maximum vCores depending on your choice of resourcing model).

Maximum storage bytes utilized by all databases in the pool.

Note: Elastic pools enable the developer to purchase resources for a pool shared by multiple databases to accommodate unpredictable periods of usage by individual databases. You can configure resources for the pool based either on the DTU-based purchasing model or the vCore-based purchasing model.

References:

https://docs.microsoft.com/en-us/azure/sql-database/sql-database-elastic-pool
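The two sizing inputs above can be sketched as simple arithmetic. This is an illustrative estimate only, assuming the DTU-based model and the common rule of thumb of taking the larger of (all databases at average load) and (concurrently peaking databases at peak load). The function names, database counts, DTU figures, and sizes are hypothetical values for this example.

```python
def estimate_pool_edtus(
    total_dbs: int,
    avg_dtu_per_db: int,
    concurrent_peak_dbs: int,
    peak_dtu_per_db: int,
) -> int:
    """Estimate pool eDTUs as the larger of average load and concurrent peak load."""
    average_load = total_dbs * avg_dtu_per_db
    peak_load = concurrent_peak_dbs * peak_dtu_per_db
    return max(average_load, peak_load)


def estimate_pool_storage_gb(db_sizes_gb: list[int]) -> int:
    """Estimate pool storage as the sum of the storage used by each database."""
    return sum(db_sizes_gb)


# Hypothetical tenant fleet: 20 customer databases averaging 10 DTUs each,
# with at most 4 of them peaking at 100 DTUs at the same time.
edtus = estimate_pool_edtus(20, 10, 4, 100)
storage_gb = estimate_pool_storage_gb([5] * 20)

print(edtus, storage_gb)
```

Because the customers peak at different times of the year, the concurrent-peak term stays far below 20 databases at 100 DTUs each, which is where the cost saving of a shared pool comes from.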

QUESTION 12

You are developing a data engineering solution for a company. The solution will store a large set of key-value pair data by using Microsoft Azure Cosmos DB.

The solution has the following requirements:

Data must be partitioned into multiple containers.

Data containers must be configured separately.

Data must be accessible from applications hosted around the world.

The solution must minimize latency.

You need to provision Azure Cosmos DB.

A. Configure account-level throughput.

B. Provision an Azure Cosmos DB account with the Azure Table API. Enable geo-redundancy.

C. Configure table-level throughput.

D. Replicate the data globally by manually adding regions to the Azure Cosmos DB account.

E. Provision an Azure Cosmos DB account with the Azure Table API. Enable multi-region writes.

Correct Answer: E

Scale read and write throughput globally. You can enable every region to be writable and elastically scale reads and writes all around the world. The throughput that your application configures on an Azure Cosmos database or a container is guaranteed to be delivered across all regions associated with your Azure Cosmos account. The provisioned throughput is guaranteed by financially backed SLAs.

References: https://docs.microsoft.com/en-us/azure/cosmos-db/distribute-data-globally

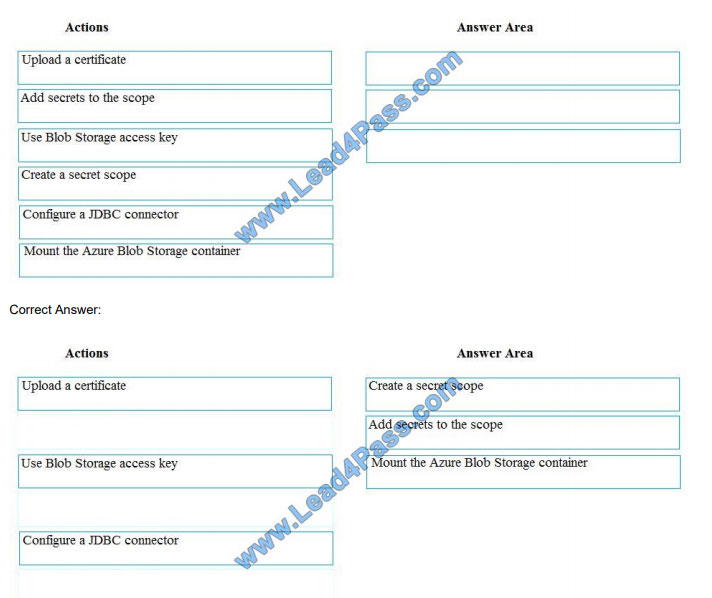

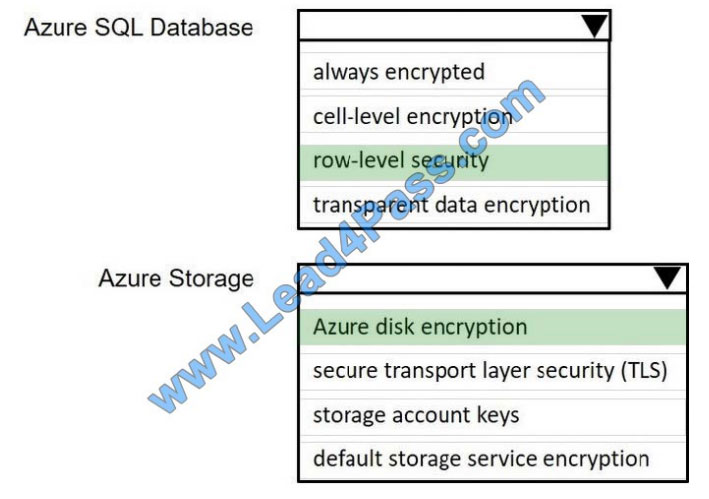

QUESTION 13

Your company uses Azure SQL Database and Azure Blob storage.

All data at rest must be encrypted by using the company's own key. The solution must minimize administrative effort and the impact on applications that use the database.

You need to configure security.

What should you implement? To answer, select the appropriate option in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

Correct Answer:
